tf.compat.v1.math.softplus

Stay organized with collections

Save and categorize content based on your preferences.

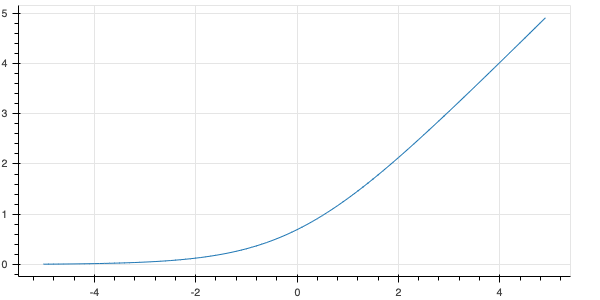

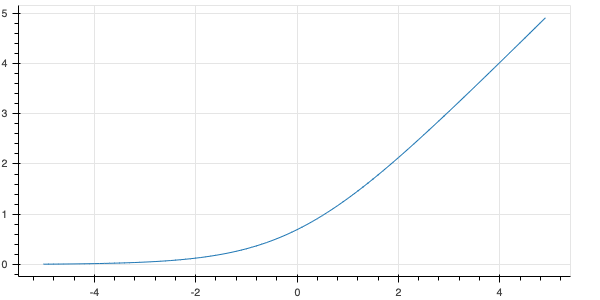

Computes elementwise softplus: softplus(x) = log(exp(x) + 1).

tf.compat.v1.math.softplus(features, name=None)


softplus is a smooth approximation of relu. Like relu, its output is never negative; unlike relu, softplus is mathematically strictly positive for every finite input.

Example:

import tensorflow as tf

tf.math.softplus(tf.range(0, 2, dtype=tf.float32)).numpy()

array([0.6931472, 1.3132616], dtype=float32)
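The formula and the relu comparison above can be checked without TensorFlow. The following is a minimal sketch using only the Python standard library; the helper names `softplus` and `relu` are defined here for illustration and are not TensorFlow's implementation. It assumes the rewritten form max(x, 0) + log1p(exp(-|x|)), which is algebraically equal to log(exp(x) + 1) but avoids overflow for large inputs.

```python
import math


def softplus(x: float) -> float:
    """Reference softplus, log(exp(x) + 1), in an overflow-safe form."""
    # max(x, 0) + log1p(exp(-|x|)) equals log(exp(x) + 1) algebraically,
    # but never exponentiates a large positive number.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def relu(x: float) -> float:
    """Plain relu for comparison (illustrative helper)."""
    return max(x, 0.0)


# Print softplus next to relu; the gap between them is log1p(exp(-|x|)),
# which is largest at x = 0 (where it equals log 2) and shrinks as |x| grows.
for x in (-5.0, 0.0, 1.0, 5.0):
    gap = softplus(x) - relu(x)
    print(f"x={x:+.1f}  softplus={softplus(x):.7f}  relu={relu(x):.1f}  gap={gap:.7f}")
```

At x = 0 and x = 1 this sketch agrees with the float32 values in the example above to within float32 precision.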

| Args | |
|---|---|
| features | Tensor |
| name | Optional: name to associate with this operation. |

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License, and code samples are licensed under the Apache 2.0 License. For details, see the Google Developers Site Policies. Java is a registered trademark of Oracle and/or its affiliates. Some content is licensed under the numpy license.

Last updated 2024-04-26 UTC.


View source on GitHub
